back to basics > operating leverage

i've liked thinking about businesses in terms of operating leverage since i started looking at internet companies seven years ago. why? it's a great framework for both founders and investors to think about profitability, scalability, and the stage of maturation of a business. it's also just a neat concept.

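operating leverage measures how sensitive operating profit is to changes in revenue: the percentage change in operating profit for a given percentage change in revenue. (in calculus speak, (d Op Profit / Op Profit) / (d Revenue / Revenue).) for a profitable business it is bounded by 1 on the low end, and it heads toward infinity as operating profit approaches zero, i.e. near breakeven.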

operating leverage is not to be confused with financial leverage. financial leverage is usually understood as debt: borrowing allows firms and funds to generate higher returns on equity by increasing the total amount of resources they can marshal. operating leverage, on the other hand, is more inherent to a given business model, and in particular to its cost structure. let's start with the equation. there are several definitions, and i prefer the following:

operating leverage = (contribution margin) / (operating profit margin)
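to see where this ratio comes from, here's a short derivation that treats operating leverage as the percentage change in operating profit per percentage change in revenue. it assumes a single product sold at a constant price p, a constant variable cost v per unit, Q units sold and fixed costs F (symbols introduced here for illustration):

```latex
\begin{aligned}
R &= pQ, \qquad \text{OP} = (p - v)\,Q - F \\
d\,\text{OP} &= (p - v)\,dQ, \qquad dR = p\,dQ \\
\text{DOL} = \frac{d\,\text{OP} / \text{OP}}{dR / R}
  &= \frac{(p - v)\,dQ / \text{OP}}{p\,dQ / (pQ)}
   = \frac{(p - v)\,Q}{\text{OP}}
   = \frac{\text{contribution profit}}{\text{operating profit}}
   = \frac{\text{contribution margin}}{\text{operating profit margin}}
\end{aligned}
```

the last step just divides top and bottom by revenue. since F is non-negative, contribution profit is at least as large as operating profit, so the ratio is at least 1 whenever operating profit is positive, and it blows up as operating profit approaches zero.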

so a lemonade stand that sells 10k cups of lemonade at $4 each with a unit cost of $2 and total fixed costs of $5k (stands are expensive) has operating leverage of 1.33x

how do i get there?

10k cups of lemonade * $4 revenue per cup = $40k in revenue

$40k - (10k cups * $2 cost per cup) = $20k contribution profit

$20k contribution profit / $40k revenue = 50% contribution margin

$20k contribution profit - $5k fixed costs = $15k operating profit

$15k operating profit / $40k revenue = 37.5% operating margin

50% contribution margin / 37.5% operating margin = 1.33x

this means that for every 1% increase in revenue, operating profit increases by 1.33%.
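here's the lemonade stand arithmetic as a small python sketch, assuming linear costs (constant price, unit cost and fixed costs). it computes the ratio of margins, then sells 10% more cups to check that operating profit moves by that multiple of the revenue change. the function names are just for illustration:

```python
def lemonade_stand(units: float, price: float = 4.0, unit_cost: float = 2.0,
                   fixed_costs: float = 5_000.0) -> dict:
    """Simple income statement for the lemonade stand example (linear costs assumed)."""
    revenue = units * price
    contribution = revenue - units * unit_cost
    operating_profit = contribution - fixed_costs
    return {"revenue": revenue, "contribution": contribution,
            "operating_profit": operating_profit}


def degree_of_operating_leverage(p: dict) -> float:
    """Contribution margin divided by operating margin."""
    if p["operating_profit"] == 0:
        raise ValueError("operating leverage is undefined at breakeven")
    contribution_margin = p["contribution"] / p["revenue"]
    operating_margin = p["operating_profit"] / p["revenue"]
    return contribution_margin / operating_margin


base = lemonade_stand(10_000)
dol = degree_of_operating_leverage(base)
print(f"DOL: {dol:.2f}x")  # DOL: 1.33x

# sell 10% more cups (so revenue rises 10%) and measure the change in operating profit
bumped = lemonade_stand(10_000 * 1.10)
revenue_change = bumped["revenue"] / base["revenue"] - 1
profit_change = bumped["operating_profit"] / base["operating_profit"] - 1
print(f"revenue {revenue_change:+.1%}, operating profit {profit_change:+.2%}")
# revenue +10.0%, operating profit +13.33%
```

in this linear model the ratio of percentage changes matches the DOL exactly from the base point, but DOL itself isn't constant: as volume grows and fixed costs become a smaller slice of contribution, operating leverage drifts back toward 1.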

you can also think about operating leverage more simply,

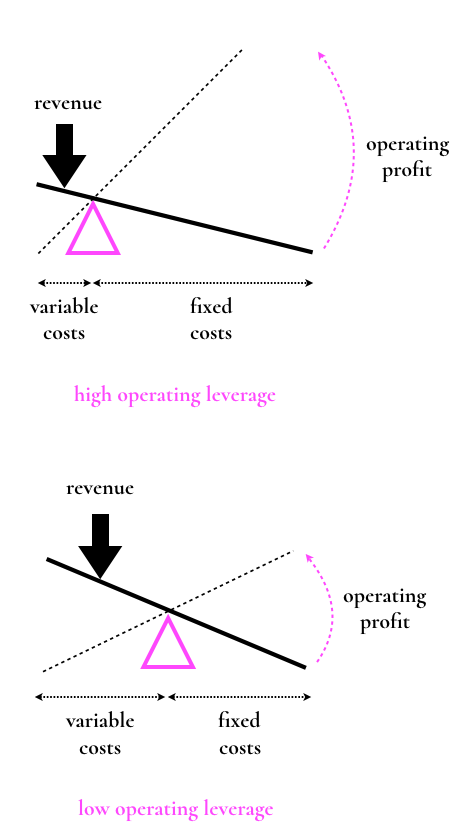

operating leverage = (fixed costs) / (total costs)

a company with a high proportion of fixed costs has high operating leverage. put another way, a company with a low proportion of variable costs also has high operating leverage.
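a sketch with two hypothetical businesses (made-up numbers) that have the same revenue and the same operating profit but opposite cost mixes. it computes both the fixed cost share and the contribution / operating profit ratio, to show the two lenses agreeing in this case:

```python
from dataclasses import dataclass


@dataclass
class CostStructure:
    revenue: float
    variable_costs: float
    fixed_costs: float

    @property
    def operating_profit(self) -> float:
        return self.revenue - self.variable_costs - self.fixed_costs

    @property
    def fixed_cost_share(self) -> float:
        # the rough proxy: fixed costs / total costs
        return self.fixed_costs / (self.variable_costs + self.fixed_costs)

    @property
    def dol(self) -> float:
        # contribution profit / operating profit (same as contribution margin / operating margin)
        return (self.revenue - self.variable_costs) / self.operating_profit


# hypothetical businesses: revenue 100 and operating profit 20 for both
fixed_heavy = CostStructure(revenue=100, variable_costs=20, fixed_costs=60)
variable_heavy = CostStructure(revenue=100, variable_costs=60, fixed_costs=20)

for name, biz in [("fixed-heavy", fixed_heavy), ("variable-heavy", variable_heavy)]:
    print(f"{name}: fixed share {biz.fixed_cost_share:.0%}, DOL {biz.dol:.1f}x")
# fixed-heavy: fixed share 75%, DOL 4.0x
# variable-heavy: fixed share 25%, DOL 2.0x
```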

why is that?

the classic example of a business with high operating leverage is Microsoft in the 90s. the R&D costs (developer salaries) of creating enterprise software like Microsoft Word were high before a single CD of Microsoft Office was sold. once the software had been written, the incremental cost of each additional CD was essentially the cost of a blank CD (plus the sales & marketing spend needed to get it onto store shelves and into companies). so Microsoft started in a hole of fixed costs, and each incremental copy of Office it sold was close to pure profit. once it sold enough copies, it dug itself out of the hole and generated substantial profits on top.
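to make the "hole of fixed costs" concrete, here's a sketch with made-up numbers (not Microsoft's actual figures): $50m of upfront development cost, a $200 price per copy and $5 of variable cost per copy. the break-even point is fixed costs divided by contribution per copy:

```python
import math

# hypothetical figures for illustration only, not Microsoft's actual numbers
FIXED_DEV_COST = 50_000_000   # upfront R&D (developer salaries)
PRICE_PER_COPY = 200          # selling price per copy
VARIABLE_COST_PER_COPY = 5    # blank CD, packaging, etc.


def operating_profit(copies: int) -> int:
    contribution_per_copy = PRICE_PER_COPY - VARIABLE_COST_PER_COPY
    return copies * contribution_per_copy - FIXED_DEV_COST


# copies needed to climb out of the fixed-cost hole (round up to a whole copy)
break_even = math.ceil(FIXED_DEV_COST / (PRICE_PER_COPY - VARIABLE_COST_PER_COPY))
print(break_even)  # 256411

for copies in (100_000, break_even, 1_000_000):
    print(f"{copies:>9,} copies -> operating profit {operating_profit(copies):,}")
```

below break-even every copy just fills in the hole; above it, each extra copy adds its full $195 of contribution to operating profit.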

other businesses that exhibit high operating leverage include game publishers (like EA or Supercell), software-as-a-service companies, pharmaceutical companies, and consumer media subscription companies (as long as they own the content they're selling).

i find operating leverage most helpful when used to compare two businesses within the same sector, like two software businesses offering the same service but one with an API-driven go-to-market and another focused on on-premise installations.

leverage cuts both ways. businesses with high operating leverage risk not recovering their fixed costs if a particular product or service doesn't perform well. high operating leverage also makes financial performance more sensitive to volatility in revenue growth and to missed expectations: because most of its costs don't shrink when revenue does, a business with high operating leverage sees its operating profit fall faster in a downturn than a similar business with low operating leverage.
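a quick sketch of the downside, using two hypothetical businesses with identical revenue (100) and operating profit (20) today but different cost mixes, and assuming variable costs scale in proportion to revenue while fixed costs stay put:

```python
def operating_profit(revenue: float, variable_cost_ratio: float, fixed_costs: float) -> float:
    # variable costs scale with revenue; fixed costs don't
    return revenue * (1 - variable_cost_ratio) - fixed_costs


def profit_change(variable_cost_ratio: float, fixed_costs: float, revenue_shock: float,
                  base_revenue: float = 100.0) -> float:
    """Percentage change in operating profit after a percentage revenue shock."""
    before = operating_profit(base_revenue, variable_cost_ratio, fixed_costs)
    after = operating_profit(base_revenue * (1 + revenue_shock), variable_cost_ratio, fixed_costs)
    return after / before - 1


# hypothetical businesses: both have revenue 100 and operating profit 20 today
businesses = {
    "high operating leverage": (0.20, 60.0),  # 20% variable costs, 60 fixed
    "low operating leverage": (0.60, 20.0),   # 60% variable costs, 20 fixed
}

for name, (ratio, fixed) in businesses.items():
    change = profit_change(ratio, fixed, revenue_shock=-0.10)
    print(f"{name}: revenue -10% -> operating profit {change:+.0%}")
# high operating leverage: revenue -10% -> operating profit -40%
# low operating leverage: revenue -10% -> operating profit -20%
```

the profit decline is the revenue decline multiplied by each business's operating leverage (4x and 2x here), the same amplification that works for you on the way up.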

a shorthand way of gauging whether a company has high operating leverage is to look at its gross margin. because COGS (cost of goods sold) are generally variable costs, businesses with high gross margins usually also have high operating leverage (unless sales & marketing costs are unusually high).

as technology investors, we find that most, but not all, of the companies we look at have high operating leverage. incremental copies of the same string of code have almost zero variable cost, and API-driven businesses can have even lower variable costs than Microsoft used to have.

that said, people's attention increasingly comes at a cost. customer acquisition cost (CAC) is a variable cost, and businesses with high CACs tend not to have high operating leverage. it's often hard to work out at an early stage what future CACs might look like. that's one reason venture investors like consumer businesses with an element of virality or strong network effects, but those deserve their own post.

in early-stage venture, a company's cost structure is often still being built out, and it can be hard to tell whether a particular company will have high operating leverage. however, i still think the fixed cost vs variable cost lens is a really helpful way of thinking about businesses.